Contention Window Optimization

The proper setting of contention window (CW) values has a significant impact on the efficiency of Wi-Fi networks. Unfortunately, the standard method used by 802.11 networks does not scale well enough to maintain stable throughput as the number of stations increases, yet it remains the default channel access method for 802.11ax single-user transmissions [1]. Therefore, the authors of [1] propose a new method of CW control that leverages deep reinforcement learning (DRL) principles to learn the correct settings under different network conditions. Their method, called centralized contention window optimization with DRL (CCOD), supports two trainable control algorithms: deep Q-network (DQN) and deep deterministic policy gradient (DDPG). They demonstrate through simulations that it offers efficiency close to optimal (even in dynamic topologies) while keeping computational cost low.
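For reference, the standard 802.11 channel access mentioned above uses binary exponential backoff (BEB). A station waits a random number of slots drawn from [0, CW]. It doubles CW, up to a maximum, after each failed transmission and resets CW to its minimum after a success. The Python sketch below illustrates that rule. The constants CW_MIN = 15 and CW_MAX = 1023 are assumed typical defaults for OFDM-based PHYs. The function names are hypothetical and are not taken from RLinWiFi or ns-3.

```python
import random

# Assumed typical defaults for OFDM-based 802.11 PHYs (not read from ns-3).
CW_MIN = 15
CW_MAX = 1023


def next_cw(cw: int, success: bool) -> int:
    """Hypothetical helper: binary exponential backoff update of CW."""
    if success:
        # A successful transmission resets the window to its minimum.
        return CW_MIN
    # On failure, CW + 1 doubles (16, 32, 64, ...), capped at CW_MAX.
    return min(2 * (cw + 1) - 1, CW_MAX)


def draw_backoff(cw: int, rng: random.Random) -> int:
    """Hypothetical helper: idle slots to wait, uniform in [0, CW]."""
    return rng.randint(0, cw)


if __name__ == "__main__":
    rng = random.Random(1)
    cw = CW_MIN
    for attempt in range(8):
        # Pretend every attempt collides, to show how CW grows.
        print(attempt, cw, draw_backoff(cw, rng))
        cw = next_cw(cw, success=False)
```

Under this rule, repeated failures grow CW as 15, 31, 63, ..., 1023, and a single success resets it to 15. CCOD instead learns the CW setting with a DRL agent [1].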

The authors published their simulation code under the name “RLinWiFi” in a GitHub repository [2]. This article repeats their experiments using ns3-gym installed in a chroot jail environment. It follows the procedure presented in [2], except that everything runs on the chroot-jail-based installation.

Installing Dependencies

Installing ns3-gym

Since we had already installed ns3-gym as described in our previous article, we reused that installation for RLinWiFi.

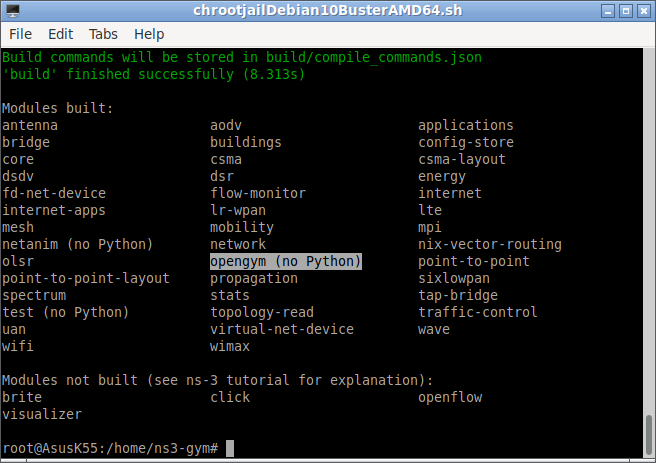

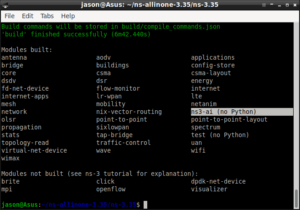

Configuring and compiling ns3-gym again

$ ./waf configure

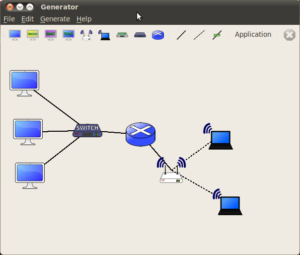

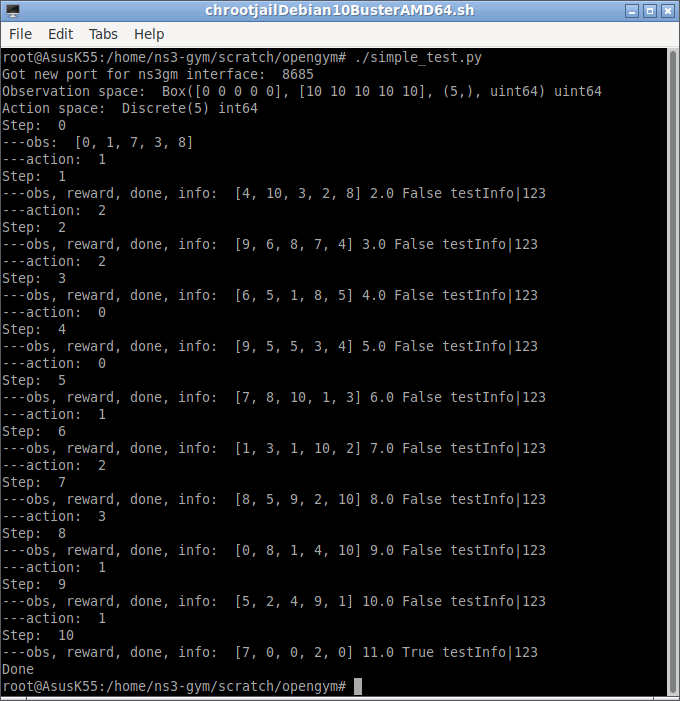

Testing RLinWiFi Contention Window Optimization

All basic configuration of the agents can be done in the files linear-mesh/agent_training.py (DDPG) and linear-mesh/tf_agent_training.py (DQN).

Example outputs of training (DDPG):

$ python agent_training.py

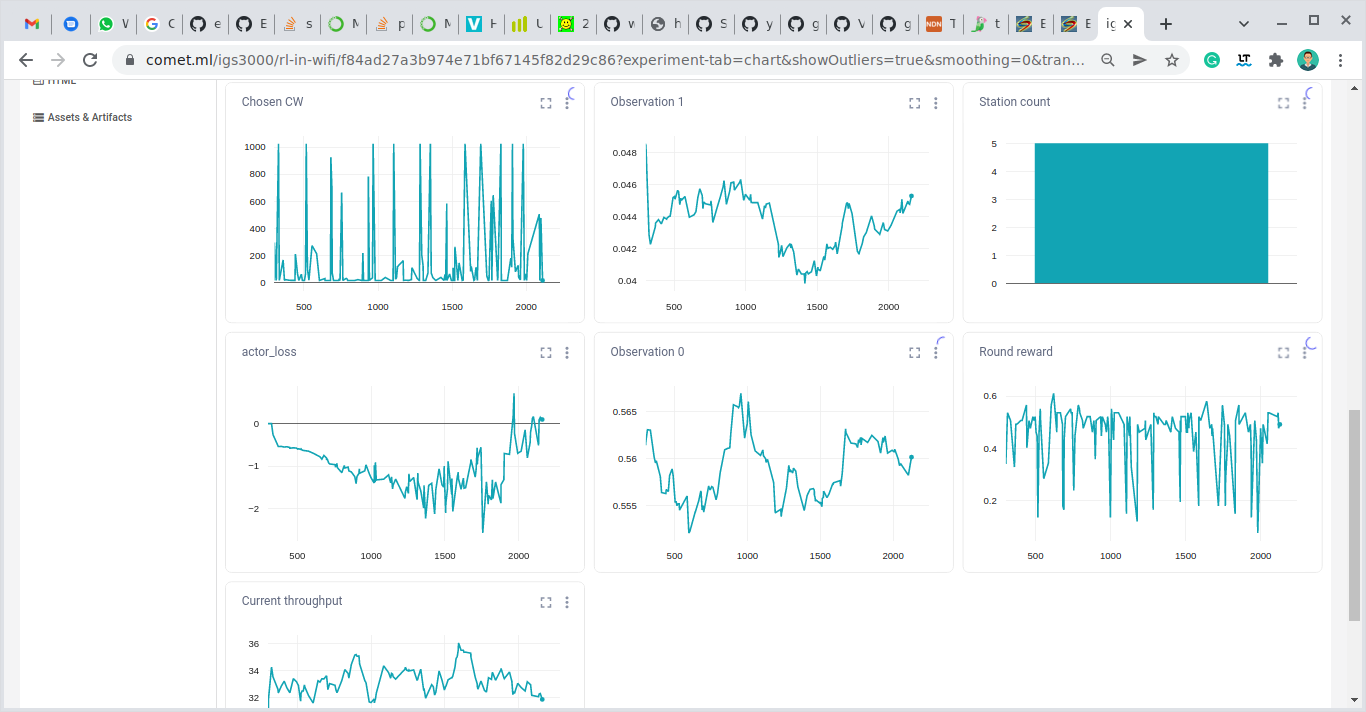

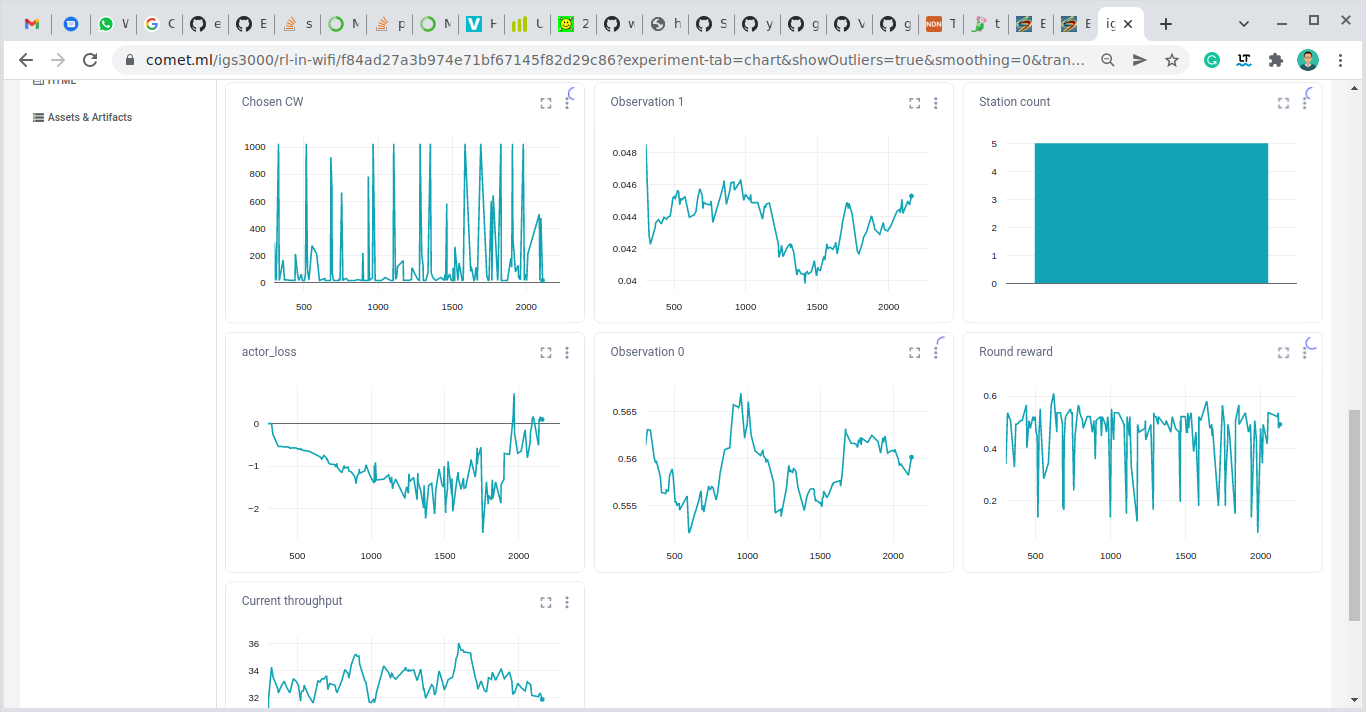

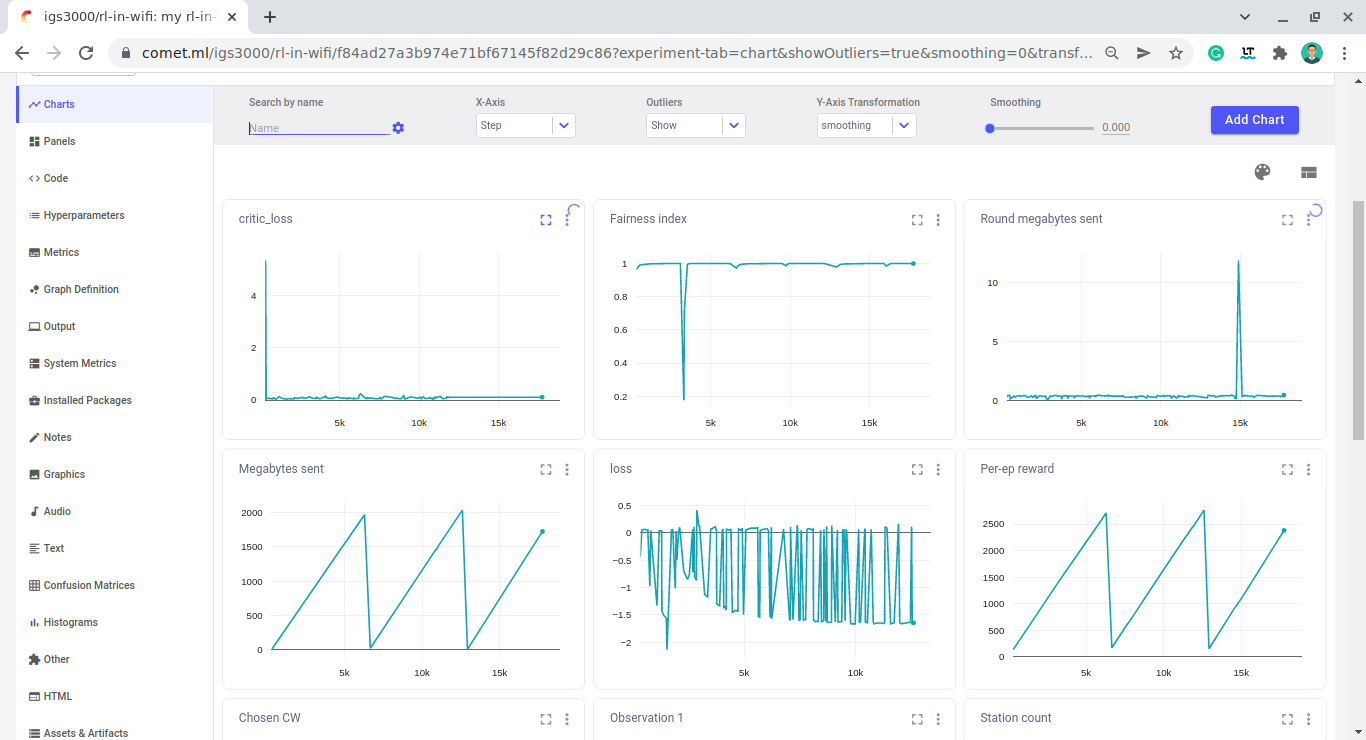

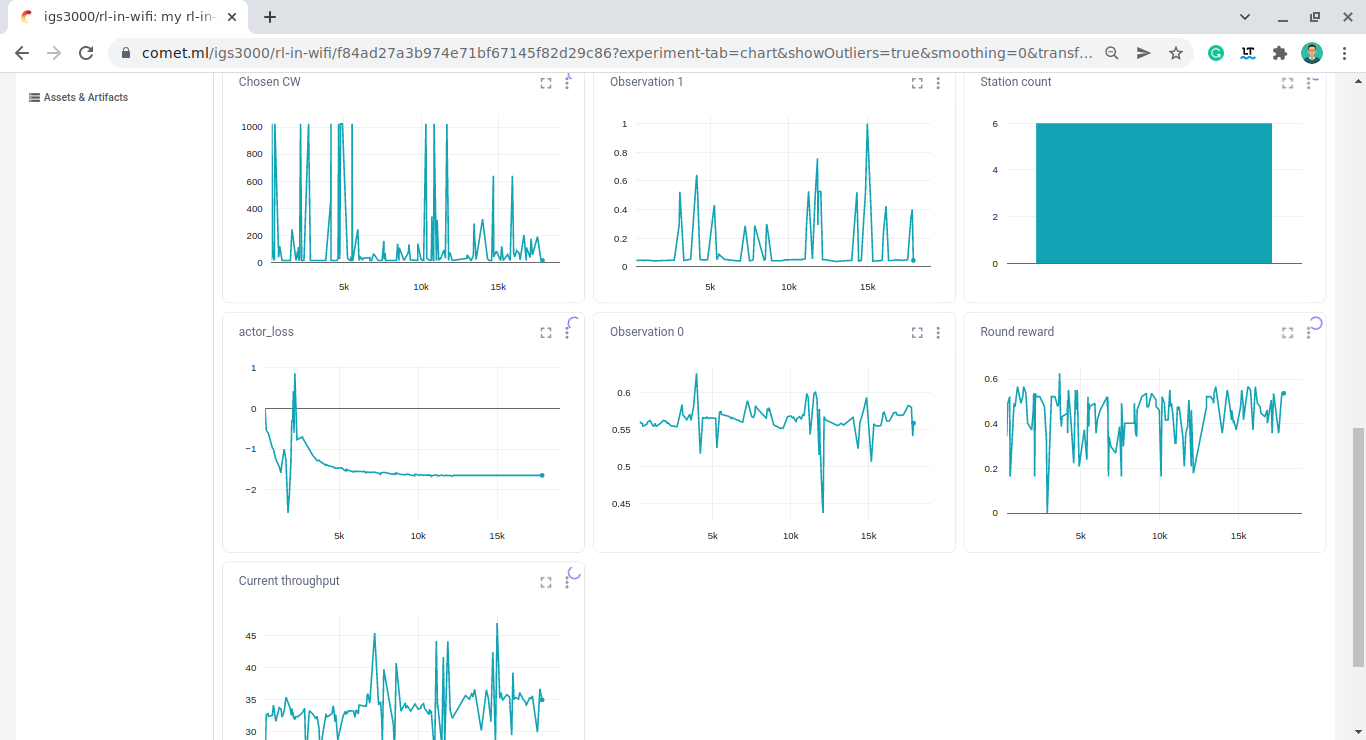

If the CometML settings file comet_token.json is configured correctly, the progress of training can be visualized in near real time through the CometML web interface.

The following output shows one such real-time log, recorded in my connected CometML account during training.

Training one episode takes anywhere from a few minutes to about an hour, depending on the speed of the machine. Since the script runs the training for three episodes, it takes considerable time to complete.

In our test, the overall training of three episodes took just over an hour (01:03:30). The following charts show the performance over 6300 × 3 training instances.
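As a rough sanity check, the measured duration can be broken down per episode and per training step. This assumes that each of the 6300 instances per episode counts as one training step. The per-step figure is derived here and is not reported by the script.

```python
# Measured wall-clock time for the full run: 01:03:30
total_seconds = 1 * 3600 + 3 * 60 + 30   # 3810 s
episodes = 3
steps_per_episode = 6300                 # assumption: one step per instance

per_episode_s = total_seconds / episodes                     # 1270 s
per_step_s = total_seconds / (episodes * steps_per_episode)  # about 0.2 s

minutes, seconds = divmod(per_episode_s, 60)
print(f"per episode: {int(minutes)} min {int(seconds)} s")
print(f"per step: {per_step_s:.3f} s")
```

On our machine, each episode therefore took about 21 minutes, which falls within the range given above.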

Running the Training for DQN

Similarly, the DQN agent can be trained as follows:

$ python tf_agent_training.py

Running the Benchmark

Finally, run the benchmark of static CW values and the original 802.11 backoff algorithm as follows:

$ python beb_tests.py --beb 5 10 15 --scenario basic convergence
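A simplified model shows why the benchmark compares several static CW values. The model is an assumption for illustration and is not what beb_tests.py computes. Suppose n saturated stations each transmit in a given slot with probability τ = 2/(CW + 2), that is, once per mean backoff (CW/2 slots) plus one slot. A slot carries a successful transmission only when exactly one station transmits. The hypothetical helpers below evaluate that per-slot success probability and pick the best static CW from a candidate set.

```python
# Simplified saturated-station model with a fixed (static) CW:
# each station transmits in a slot with probability tau = 2 / (CW + 2);
# a slot succeeds when exactly one of the n stations transmits.

CANDIDATE_CWS = [15, 31, 63, 127, 255, 511, 1023]  # hypothetical candidate set


def success_probability(cw: int, n: int) -> float:
    """Probability that exactly one of n stations transmits in a slot."""
    tau = 2.0 / (cw + 2)
    return n * tau * (1.0 - tau) ** (n - 1)


def best_static_cw(n: int) -> int:
    """Candidate CW with the highest per-slot success probability."""
    return max(CANDIDATE_CWS, key=lambda cw: success_probability(cw, n))


if __name__ == "__main__":
    for n in (5, 15, 50):
        cw = best_static_cw(n)
        print(n, cw, round(success_probability(cw, n), 3))
```

In this model, the best static value grows with the number of stations: 15 for 5 stations and 127 for 50. With 50 stations, CW = 15 yields a per-slot success probability of only about 1%. A CW tuned for a few stations therefore performs poorly for many, which is why a CW that adapts to network conditions is attractive [1].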

This article is intended only to help with installing ns3-gym and the RLinWiFi contention window optimization framework. How the framework actually works may be covered in a future article.

References

- [1] Witold Wydmanski and Szymon Szott, “Contention Window Optimization in IEEE 802.11ax Networks with Deep Reinforcement Learning,” arXiv:2003.01492v4 [cs.NI], 22 Jan. 2021. Accepted for IEEE WCNC 2021. © 2021 IEEE.

- [2] RLinWiFi GitHub repository: https://github.com/wwydmanski/RLinWiFi

- [3] Miniconda Linux installers: https://docs.conda.io/en/latest/miniconda.html#linux-installers

- [4] Comet ML: https://www.comet.ml/
